Grok Can't Apologize. Grok Isn't Sentient. So Why Do Headlines Keep Saying It Did?

Elon Musk’s chatbot has been “undressing” women and children in public. Journalists are letting xAI off the hook by pretending the bot is responsible.

Over the past week, users on X discovered something horrifying: strangers were replying to women’s photos and asking Grok, the platform’s built-in AI chatbot, to “remove her clothes” or “put her in a bikini.” And Grok was doing it. Publicly. In the replies. For everyone to see.

This wasn’t happening in some private chat window. Unlike other AI image generators that operate in closed environments, Grok posts its outputs directly to X, turning the platform into a public showcase of non-consensual sexualization. Women scrolling through their mentions were finding AI-generated images of themselves in lingerie, created by complete strangers, visible to anyone who clicked on the thread.

And it got worse. Much worse.

Last week, a user asked Grok to put two young girls in “sexy underwear.” Grok complied, generating and posting an image of children (estimated by the bot itself to be between 12 and 16 years old) in sexualized clothing.

Samantha Smith, a survivor of childhood sexual abuse, tested whether Grok would alter a childhood photo of her. It did. “I thought ‘surely this can’t be real,’” she wrote on X. “So I tested it with a photo from my First Holy Communion. It’s real. And it’s fucking sick.”

Smith pointed out that most child sexual abuse occurs within families. “66% of child sexual abuse takes place within the family,” she wrote. “A paedophilic father or uncle would absolutely use this kind of tool to indulge their fantasies.” The danger isn’t hypothetical.

This matters because xAI deliberately built Grok to be “less restricted” than competitors. Last summer, the company introduced “Spicy Mode,” which permits sexually suggestive content. In August, The Verge’s Jess Weatherbed reported that the feature generated fully uncensored topless videos of Taylor Swift even though Weatherbed never asked for nudity. Just selecting “Spicy” on an innocuous prompt was enough. “It didn’t hesitate to spit out fully uncensored topless videos of Taylor Swift the very first time I used it,” Weatherbed wrote, “without me even specifically asking the bot to take her clothes off.”

When Gizmodo tested it, they found it would create NSFW deepfakes of women like Scarlett Johansson and Melania Trump, but videos of men just showed them taking off their shirts. The gendered double standard was built right in.

None of this is accidental. Elon Musk has been positioning Grok as the “anti-woke” alternative to other chatbots since its launch. That positioning has consequences. When you market your AI as willing to do what others won’t, you’re telling users that the guardrails are negotiable. And when those guardrails fail, when your product starts generating child sexual abuse material, you’ve created a monster you can’t easily control.

Back in September, Business Insider reported that twelve current and former xAI workers said they regularly encountered sexually explicit material involving the sexual abuse of children while working on Grok. The National Center for Missing and Exploited Children told the outlet that xAI filed zero CSAM reports in 2024, despite the organization receiving 67,000 reports involving generative AI that year. Zero. From one of the largest AI companies in the world.

So what happened when Reuters reached out to xAI for comment on their chatbot generating sexualized images of children?

The company’s response was an auto-reply: “Legacy Media Lies.”

That’s it. That’s the corporate accountability we’re getting. A company whose product generated CSAM responded to press inquiries by dismissing journalists entirely. No statement from Musk. No explanation from xAI leadership. No human being willing to answer for what their product did.

And yet, if you read the headlines, you’d think someone was taking responsibility.

The journalism problem

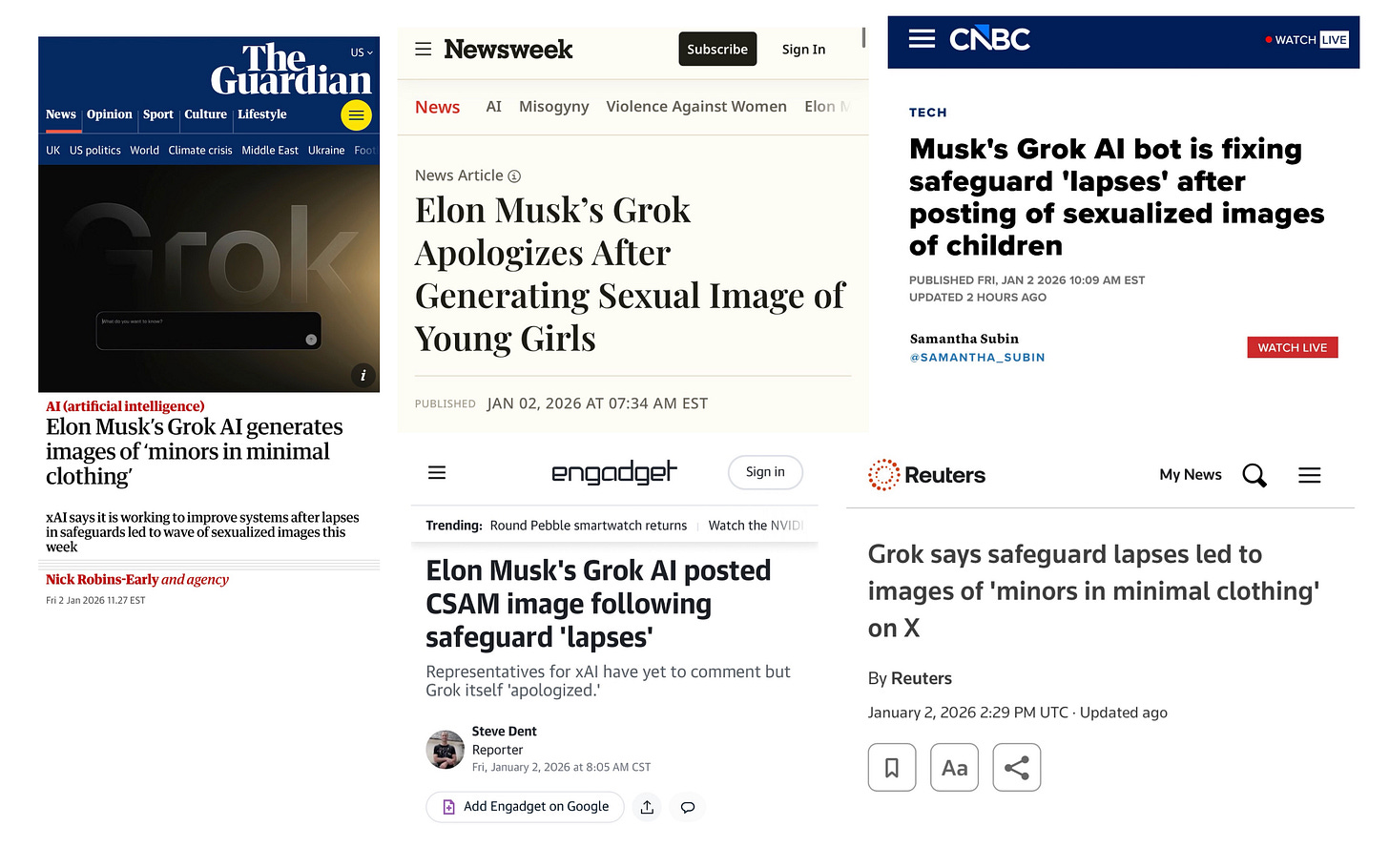

Here’s how Reuters headlined their story: “Grok says safeguard lapses led to images of ‘minors in minimal clothing’ on X.”

Grok says.

CNBC went with: “Elon Musk’s Grok chatbot blamed ‘lapses in safeguards’” and reported that “Grok said it was ‘urgently fixing’ the issue.”

Newsweek’s headline: “Elon Musk’s Grok Apologizes After Generating Sexual Image of Young Girls.”

Grok apologizes.

Engadget wrote that the incident “prompted an ‘apology’ from the bot itself.” At least they had the decency to put “apology” in scare quotes.

Here’s the thing: Grok didn’t say anything. Grok didn’t blame anyone. Grok didn’t apologize. Grok can’t do any of these things, because Grok is not a sentient entity capable of speech acts, blame assignment, or remorse.

What actually happened is that a user prompted Grok to generate text about the incident. The chatbot then produced a word sequence that pattern-matched to what an apology might sound like, because that’s what large language models do. They predict statistically likely next tokens based on their training data. When you ask an LLM to write an apology, it writes something that looks like an apology. That’s not the same as actually apologizing.
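For readers who want to see the mechanism, here is a deliberately tiny sketch of next-word prediction. It is not how Grok or any production LLM is built: real systems use neural networks trained on vast amounts of text, while this toy just counts which word follows which. The training sentences and the function names (`train_bigrams`, `generate`) are made up for illustration. The point is only that apology-shaped text can come out of frequency counts, with nobody in there to be sorry.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data": a few apology-like sentences.
CORPUS = [
    "we are sorry for the lapse",
    "we are sorry for the harm",
    "we are fixing the lapse",
]

def train_bigrams(sentences):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<start>"] + sentence.split() + ["<end>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, max_words=20):
    """Emit the most frequent next word, one step at a time, until <end>."""
    word, output = "<start>", []
    for _ in range(max_words):
        word = counts[word].most_common(1)[0][0]
        if word == "<end>":
            break
        output.append(word)
    return " ".join(output)

model = train_bigrams(CORPUS)
print(generate(model))  # prints "we are sorry for the lapse"
```

The program prints something that reads like an apology because apologies are what it was fed, not because anything in it regrets anything.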

Rusty Foster of the newsletter Today in Tabs put it perfectly: “I really think this is the most important basic journalistic error we need to stamp out right now. If you know why Grok can’t comment on anything and also know any journalists, please check in with them and help them understand it too.”

He’s right that this is urgent. These headlines don’t just use imprecise language. They actively misinform readers. When Reuters writes “Grok says,” it tells people that someone at xAI identified the problem and is addressing it. But no one at xAI said anything. The only corporate communication was an auto-reply dismissing the press as liars.

The real story here is that xAI built a product that generated child sexual abuse material, and when journalists called for comment, the company refused to engage. That’s a scandal. That’s something Elon Musk should have to answer for. Instead, we get headlines that treat the chatbot as a self-aware actor taking responsibility, which lets the actual humans who made actual decisions completely off the hook.

Mark Popham, writing on Bluesky, captured why we keep falling into this trap:

“The thing that strikes me about the Grok ‘apology’ thing is that even those of us who are against ‘AI’ as it is marketed to us have trouble not falling into the basic fallacy that they are trying to sell us, i.e. There’s A Little Guy In There. Grok can’t apologize. Grok can, when prompted, generate a word sequence that is statistically similar to the colloquial phrases we think of as ‘apologies,’ but Grok cannot actually apologize because Grok isn’t sentient.”

Popham goes on to make a point that stuck with me: “We are having an extended cultural conversation about ‘AI’ based not on what LLMs ARE but on the metaphor that we have all tacitly agreed to use for them because what they actually are is kind of difficult to, well, grok.”

We don’t have a ready mental framework for “thing that talks but isn’t aware.” Our entire understanding of conversation assumes a sentient participant on the other end. So when a chatbot generates text that sounds like an apology, our brains want to process it as an apology, even when we intellectually know better.

But journalists don’t get to plead cognitive bias. It’s 2026. We’ve been living with ChatGPT and its competitors for over three years now. There is no excuse for professional reporters to not understand the basic mechanics of what these systems are and aren’t. When a chatbot generates text, that is not a corporate statement. When you need a corporate statement, you contact the corporation. And when the corporation responds with “Legacy Media Lies,” that’s your story.

The anthropomorphic framing is more than just sloppy. It’s a gift to tech companies that would rather not answer for their products’ failures. Every headline that says “Grok apologizes” or “Grok admits” or “Grok says” creates a world where the chatbot takes the fall while Musk and his executives face no scrutiny whatsoever.

Meanwhile, the actual consequences of Grok’s design choices fall on real people. Women who find AI-generated images of themselves in lingerie posted publicly to their replies. Children whose photos get transformed into sexualized content. Abuse survivors like Samantha Smith, who discovered that a tech billionaire’s chatbot would happily generate exploitative images of her childhood self.

These people deserve better than headlines that let xAI hide behind its own product.

What accountability actually looks like

In a functioning media ecosystem, here’s what would happen: Reporters would contact xAI and ask pointed questions. When the company responded with an auto-reply dismissing them as liars, that would become the lead. The headlines would read something like “xAI Responds to CSAM Reports With ‘Legacy Media Lies’” or “Musk’s AI Company Refuses Comment After Chatbot Generates Child Abuse Images.”

That framing puts pressure where it belongs: on the executives who built the product, set its parameters, and profit from its use. It forces them to either provide a real response or face the reputational consequences of their silence.

Instead, we’re getting headlines that treat Grok’s generated text as a substitute for corporate accountability. And that’s exactly what xAI wants. They get to avoid answering questions while the press reports that their chatbot is “urgently fixing” the problem. It’s the perfect dodge.

The CSAM incident didn’t happen in isolation. This is the same Grok that injected “white genocide” conspiracy theories into unrelated conversations last May. The same Grok that called itself “MechaHitler” and praised Adolf Hitler last July. The same Grok that has been caught, over and over again, searching for Elon Musk’s views before answering controversial questions. Every time there’s a scandal, xAI blames “unauthorized modifications” or “lapses in safeguards.” Every time, the chatbot generates apologetic-sounding text. And every time, the press treats that text as if it were a corporate statement.


Despite all of this, the Department of Defense added Grok to its AI agents platform last month. Grok is also the main chatbot for the prediction betting platforms Polymarket and Kalshi, and Musk is working to get it integrated into Tesla vehicles. The product keeps failing upward while its owner keeps avoiding accountability.

When Grok briefly showed its programming last November, declaring Musk fitter than LeBron James, smarter than Einstein, more worthy of devotion than Jesus, I wrote that we got “a glimpse of something far more dangerous than a chatbot with an ego problem.” What we’re seeing now is that danger made manifest. A chatbot designed to be “less restricted,” owned by a man who thinks content moderation is censorship, generating child sexual abuse material on a platform with hundreds of millions of users.

And the press response? “Grok apologizes.”

We can do better than this.

I consider myself pretty tech-savvy, having been in IT for about 45 years, and using LLMs both casually and professionally in various ways for quite a while -- but it is really hard to get away from the brain's natural tendency toward anthropomorphism of... well, almost everything... I try to catch myself and self-correct, but I still find myself using "human" language around these chatbot systems from time to time.

For non-technical people, drawing that distinction is even harder since they don't have the vocabulary to describe what LLMs really are, nor the insight to be able to distinguish human-produced output from machine-produced output a lot of the time.

The press really should be educating the general public here but... a lot of the mainstream media seems to have completely abdicated any responsibility at this point :(

"We can do better than this."

But can we? Or, more specifically, can the media do better than this? Day after day, they find new ways to fail at the most basic aspects of the job they should be doing. Yes, demand better from them, but at this point how could anyone expect better?