“That’s AI” (It Isn’t)

Verified video of Alex Pretti dismissed as fake by people who think they’re fighting misinformation. Meanwhile, AI-generated content spreads as proof.

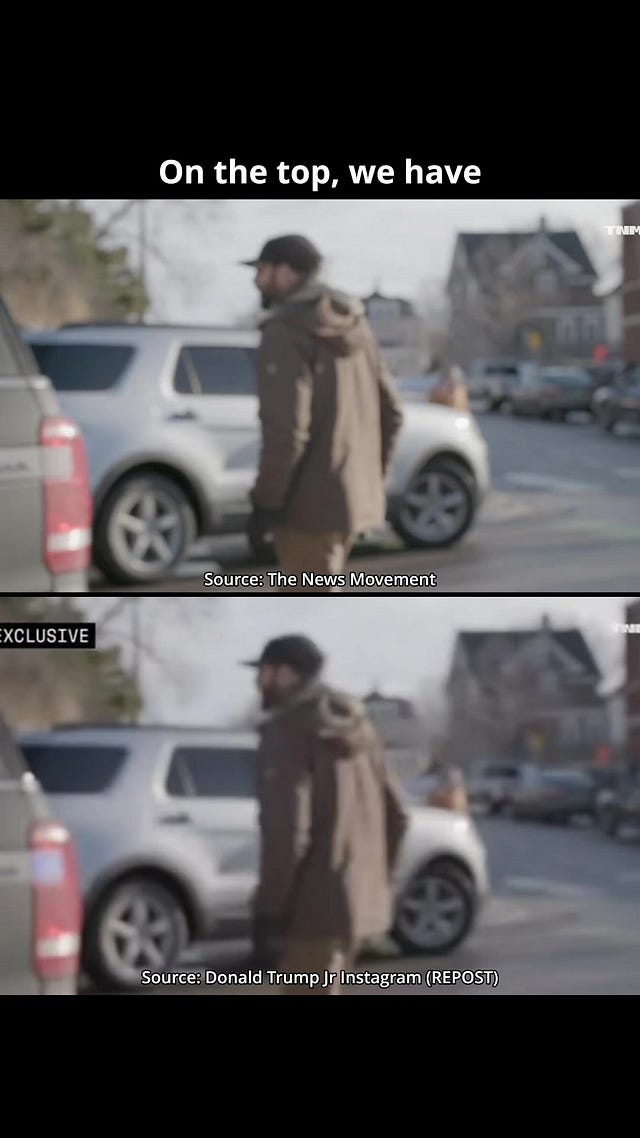

On Wednesday, The News Movement published footage that adds new context to the killing of Alex Pretti, the 37-year-old ICU nurse who was shot by federal agents in Minneapolis on January 24.

The video was filmed on January 13, eleven days before Pretti’s death. It shows him in a confrontation with federal immigration agents at the corner of East 36th Street and Park Avenue in south Minneapolis. The News Movement’s team had been filming a documentary about ICE activity in the city when they received a tip that agents were blocking a street, and they headed to the scene.

What they captured is tense and chaotic. Pretti, wearing a dark baseball cap and brown winter coat, is yelling at federal agents as they prepare to leave in a black Ford Expedition with its lights flashing. He appears to spit toward the driver’s side of the vehicle. As the SUV starts pulling away, he kicks at the taillight, then delivers a second kick that shatters the red plastic casing.

That’s when things escalate. An agent in a gas mask and helmet gets out of the vehicle, grabs Pretti by the jacket, and pulls him to the ground. Other agents join in. Pretti’s coat comes off during the struggle. He either breaks free or the agents let him go, and he scrambles away. When he turns, you can see what appears to be a handgun tucked into the back of his waistband. He never reaches for it. The agents deploy tear gas and pepper balls toward the crowd of onlookers, many of whom are filming on their phones. Then the agents retreat to their vehicles and drive away.

Pretti walks away, too. He wasn’t arrested. According to CNN’s reporting, he suffered a broken rib during a confrontation with federal agents approximately a week before his death, and medical records reviewed by CNN showed medication consistent with that injury. It’s unclear whether this is the same incident, but the timeline matches up.

The video’s authenticity has been verified thoroughly. The BBC’s verification unit used facial recognition technology and found a 97% match. CBS News confirmed the footage was filmed in Minneapolis. A spokesperson for Pretti’s family confirmed to CNN, KARE11, and the Minnesota Star Tribune that the man in the video is Pretti. The Associated Press confirmed with a person who has direct knowledge of the situation that Pretti had told his family about the confrontation. And there’s a second video of the same incident, filmed by Max Shapiro, a Minneapolis attorney who witnessed it, that corroborates what The News Movement captured.

Professional journalists who were there. One of the most respected news verification units in the world. The dead man’s own family. An independent eyewitness with his own footage. The Associated Press. All confirming the same thing. The video is real.

The right seizes on it

Conservatives treated the video like a vindication.

Trump shared the clip on Truth Social without comment, then reposted a supporter’s message that read “Such a peaceful protestor” with skeptical emojis. Donald Trump Jr. posted it with the caption “just a peaceful legal observer?!?!?” Benny Johnson declared “LEFTIST HOAX DESTROYED” and called Pretti “a violent agitator and psychopath hellbent on attacking federal law enforcement.”

Megyn Kelly went further. “Alex Pretti was itching for another confrontation with Border Patrol, whom he’d been stalking, harassing and terrorizing,” she wrote on X. “HE had been victimizing THEM. His felonies are on tape. He was reckless, and it cost him his life. Find another poster boy, illegal-loving Leftists.” Turning Point USA’s Savanah Hernandez called him “a menace to society.” Tim Pool described him as a “known violent extremist.”

The message, stated plainly or implied, is that the video proves Pretti got what was coming to him. He was aggressive. He was looking for trouble. He was the kind of person who kicks a cop car and spits at agents. What did he expect?

Here’s what the video actually shows: a man in a confrontation with federal agents who kicks out a taillight, gets tackled to the ground, and then walks away. What it doesn’t show: Pretti brandishing a weapon. Pretti attacking anyone. Pretti doing anything that would carry the death penalty in any jurisdiction in the United States.

Eleven days later, he was shot at least ten times.

Crooked Media’s Jon Favreau responded to Kelly’s post: “Federal agents shot him ten times after disarming him, Megyn. If you’d like to live in a country where the punishment for kicking a taillight is a public execution, you’re free to leave America.”

It’s worth pausing on something Tim Pool raised, perhaps unintentionally. “Could he have been known to the agents as they attempted to arrest him?” he asked, framing it as damning context about Pretti.

But think about what that question actually implies.

Pretti was wearing the same coat and the same black hat on January 24 that he wore on January 13. It’s not yet known whether any of the agents who shot Pretti on January 24 were present during the January 13 incident. The Department of Homeland Security has told the Daily Wire it has no record of the earlier confrontation, which is strange given that there’s now verified video of agents tackling a man to the ground and deploying chemical irritants into a crowd of bystanders.

If the agents who killed Pretti recognized him from eleven days earlier, that’s not a point in the government’s favor. It raises the question of whether he was targeted. Pool probably didn’t intend to suggest that federal agents executed a man they recognized from a previous encounter in which he kicked their car, but that’s where the logic leads if you follow it.

How some on the left responded

Not everyone engaged with the substance of what the video shows. For a number of people, there was a simpler explanation at hand.

“It’s AI.”

“This is AI. JFC people are stupid.”

“Stop spreading AI videos.”

“Fake asf.”

“This is AI slop video - stop giving it oxygen please.”

“Oh honey... this is completely fabricated.”

The comments piled up beneath posts sharing the footage, a chorus of confident dismissal. Some invoked the source as the tell: “Just saw a MAGA troll account post this. It’s obviously AI. When you have to create an AI video to justify something, you know you’re on the wrong side.” Others set evidentiary standards that no video could possibly meet: “Unless I see under oath testimony from the person who shot this video and at least one other witness, I am going to discount it as AI.”

Some framed their skepticism as a moral stance, a refusal to be fooled: “AI. Nice try, Russia.”

And some got angry at anyone who suggested the video might be worth taking seriously: “The fact that you’ve shared and given a platform to this very obvious AI doctored video is appalling. Fuck you!!!”

What I noticed about so many of these responses is the certainty. There’s no hedging, no “this looks suspicious” or “I’d want to see this verified before I believe it.” The video is fake. Obviously fake. So obviously fake that anyone sharing it is either a dupe or a collaborator.

One comment, in particular, stuck with me: “We’re already fighting the gaslighting. We don’t need them to have fake evidence.”

That commenter believes they’re on the right side of an information war. They’ve learned the lessons of the past decade. They know that bad actors flood the zone with AI-generated slop. They know that foreign influence operations seed fake content to divide Americans. They know that MAGA accounts share misleading videos to smear people.

All of those things are true. And in this case, the video is also real.

Meanwhile, actual AI content spreads as evidence

While verified video gets dismissed as fake, actual AI-generated content about this same shooting has been created, shared, and treated as though it were real.

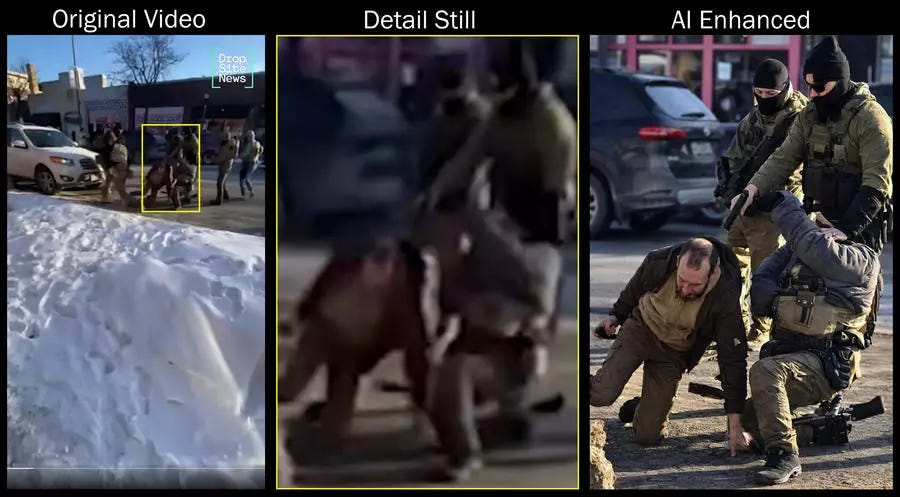

Start with the “enhanced” still frame from the shooting itself. Shortly after Pretti was killed, someone ran a blurry screenshot from one of the bystander videos through an AI image enhancement tool and posted the result. The image went viral. People shared it as proof of what happened in the moments before Pretti was shot. Some claimed they could see a gun in his hand.

Lead Stories fact-checked the image and found it riddled with hallucinations. The AI had rendered one ICE agent with no head and no hands. A boot appeared as a chunk of ice. A wedding ring materialized on a hand that doesn’t exist in the original footage. The supposed gun some viewers thought they saw in Pretti’s hand? Frame-by-frame review of the original videos confirmed that Pretti had already been disarmed by another agent at that moment. The weapon was hallucinated.

That was just the beginning.

This week, another video emerged claiming to show Pretti’s gun misfiring as an agent ran with it, complete with vivid muzzle flash, smoke, and sparks. The caption described it as “AI enhanced.” Lead Stories examined it and concluded that the label was misleading. The correct term, they wrote, is “AI-generated.” The muzzle flash, smoke, and sparks were fabricated entirely. They don’t appear in any of the original footage. The video didn’t enhance what was there; it invented something that wasn’t.

Beyond the shooting footage itself, a sprawling ecosystem of AI-generated garbage has attached itself to this story. Lead Stories documented what they call “Viet Spam”: a clickbait operation based in Vietnam that uses AI tools to generate fake stories targeting American and European audiences. In the days since Pretti’s death, this operation has published claims that Tom Brady, along with at least 36 other athletes and entertainers, had confirmed Pretti was a relative. That Pretti’s parents said he had quit his job and exhibited “unusual behavior.” That he had been fired from his nursing position over allegations of misconduct. All of it generated by AI. All of it false. All of it shared by people who believed they were spreading the truth.

Real video, called fake. Fake content, shared as real. Often by people in the same political communities, sometimes by the same people, within the same news cycle.

This pattern isn’t new, but it’s spreading

I wrote about this dynamic back in November, when the two faces of this problem showed up in the same news cycle.

On one side, Trump pointed at archival footage of Ronald Reagan and declared it “probably AI.” The footage, from the National Archives, showed Reagan advocating for free trade in terms that directly contradicted Trump’s tariff policy. Ontario Premier Doug Ford had used it in an ad. Rather than engage with the substance, Trump just waved his hand and said “AI or something.” Days earlier, his own press team had told reporters that a different embarrassing video was real, and he called that one AI too. Months before that, he admitted: “If something happens that’s really bad, maybe I’ll have to just blame AI.”

On the other side, Fox News ran AI-generated videos of Black women claiming to be SNAP recipients, presenting them as real news. The videos featured women saying things like “I have seven different baby daddies and none of ‘em no good for me.” The videos had Sora watermarks. Nobody actually talks like that. The content was racist caricature generated by a machine. But it confirmed what Fox’s audience wanted to believe, so the site ran with it until it got caught and quietly rewrote the whole piece.

Legal scholars Danielle Citron and Robert Chesney identified this problem back in 2018, calling it “the liar’s dividend.” The idea is straightforward: as AI-generated content becomes more prevalent, liars benefit even when they’re not using AI themselves. “If the public loses faith in what they hear and see and truth becomes a matter of opinion,” they wrote, “then power flows to those whose opinions are most prominent.” A skeptical public will be primed to doubt the authenticity of real evidence.

In an interview with the Associated Press, digital forensics expert Hany Farid, a professor at UC Berkeley, put it plainly: “I’ve always contended that the larger issue is that when you enter this world where anything can be fake, then nothing has to be real. You get to deny any reality because all you have to say is, ‘It’s a deepfake.’”

When I wrote that piece, I was focused on the powerful: a president and a major news network, each exploiting public uncertainty about AI for their own purposes. The liar’s dividend seemed like a tool of institutions.

The Pretti case suggests something has shifted. The people calling The News Movement’s video “AI” aren’t politicians with something to hide. They’re not media executives pushing a narrative. They’re regular people on social media, scrolling through their feeds, seeing footage that complicates a story they’re emotionally invested in, and reaching for “that’s fake” as a reflex. The liar’s dividend used to be a strategy. Now it’s just how we process inconvenient information.

The problem isn’t necessarily AI literacy

I want to be careful about how I frame this, because it would be easy to turn this into a piece about people being stupid or gullible or hypocritical. That’s not what’s happening here.

The people dismissing The News Movement’s video as AI aren’t dumb. They’re not bad at spotting fakes. Many of them are probably quite good at it. They’ve spent years learning to be skeptical of viral content. They know that foreign influence operations seed fake videos to divide Americans. They know that AI-generated slop floods social media every day. They know that bad actors create convincing forgeries to manipulate public opinion.

All of that is true. The problem is that they’ve internalized “be skeptical of AI” as a heuristic and they’re deploying it selectively, based on whether the content in question confirms or challenges their existing beliefs.

This is confirmation bias with a new escape hatch.

Confirmation bias isn’t new. Psychologists have studied it for decades. People seek out information that supports what they already believe and discount information that contradicts it. We all do this to some degree. It’s a feature of how human brains process information under conditions of uncertainty.

What’s new is that “it’s AI” has become a socially acceptable, even virtuous-seeming, way to dismiss inconvenient evidence. If you say “I don’t believe that video because it contradicts my worldview,” you sound closed-minded. If you say “I don’t believe that video because it’s obviously AI,” you sound like you’re being a responsible media consumer.

The framing matters. When someone writes “We’re already fighting the gaslighting. We don’t need them to have fake evidence,” they’re not admitting to motivated reasoning. They’re positioning their skepticism as a form of resistance. Calling the video fake becomes an act of solidarity with the right side of history.

And this is where fact-checking runs into a wall.

The video has been verified by the BBC, corroborated by an independent witness with his own footage, and confirmed by Pretti’s own family to multiple outlets. None of this matters if you’ve already decided the video is fake.

That’s the trap. Verification infrastructure only works if people are willing to update their beliefs based on what verification finds. When the response to “the BBC confirmed it” is “of course the BBC would say that,” or when “the family confirmed it” gets met with “they’re probably in on it,” we’ve moved beyond a problem that better fact-checking can solve.

We spent years teaching people to question what they see. We didn’t spend nearly enough time teaching people to question why they believe what they believe.

For those who would like to financially support The Present Age on a non-Substack platform, please be aware that I also publish these pieces to my Patreon.

What this means for what’s coming

Alex Pretti was shot at least ten times by federal agents on a Minneapolis sidewalk on January 24. There is extensive video documentation of his death, filmed from multiple angles by bystanders with cell phones. There is now verified video of an earlier confrontation between Pretti and federal agents, filmed by professional journalists on the scene and corroborated by an independent witness. His family has confirmed the details. Major news organizations have verified the footage. The BBC used facial recognition technology.

And still, nearly a week later, we cannot agree on what is real.

Some people look at the January 13 video and see justification for what happened eleven days later. Some people look at the same video and see a man who was later targeted and killed by agents who may have recognized him. Some people refuse to look at the video at all because they’ve decided it doesn’t exist.

This is one shooting. One dead man. One relatively short and well-documented sequence of events. And the information ecosystem has already fractured into mutually incompatible realities, each with its own set of evidence and its own framework for dismissing the evidence that doesn’t fit.

Now imagine this dynamic applied to the next contested shooting. The next election. The next pandemic. The next anything where people have stakes and “that’s AI” provides a convenient exit from engaging with uncomfortable facts.

We built detection tools. We funded verification units. We taught media literacy. And it turns out none of that matters much when people can simply opt out of reality by invoking the magic words.

I don’t have a solution to offer here. The technology that enables AI-generated content is improving faster than our social capacity to adapt to it. The psychological vulnerabilities that make the liar’s dividend work are deeply rooted in how humans process threatening information. The political incentives that reward reality-denial aren’t going anywhere.

What I can do is name what’s happening. A verified video is being dismissed as fake by people who think they’re fighting misinformation. Fake content is being shared as evidence by people who think they’re documenting the truth. These things are occurring simultaneously, about the same event, sometimes by the same people.